ncdu-import

Figuring out what’s taking up space is a well-known issue, with a variety of great tools for it… if we’re talking about files on a local hard drive.

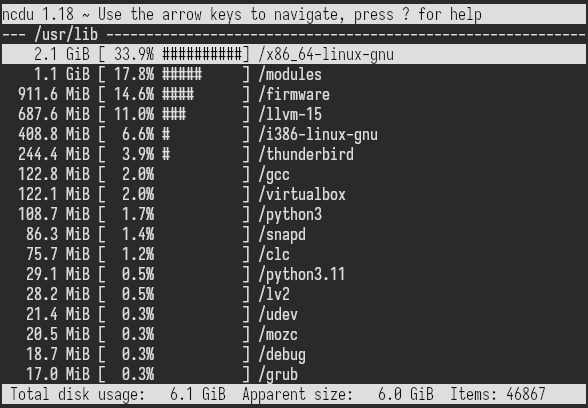

Tools like the textual ncdu and the graphical baobab let you start with a high-level summary, and dive into specific directories to find out what’s taking up all of the space.

However, sometimes your files live on a cloud storage system, which is happy to bill you for the space they take, but whose UI doesn’t make it easy to figure out which directories take up that storage. For example, with Google Cloud Storage, you can use rclone ncdu, but on my modest backup bucket it consistently timed out. For this purpose, the recommended path appears to be Storage Inventory, which will provide you with a CSV listing of all of the files in your bucket. The apparent recommendation is to analyze it using a custom-crafted BigQuery query, which is nowhere near as handy as ncdu.


Fortunately, ncdu has an import/export feature, for those slow scans. ncdu -o foo.json will save such a report (slowly), and ncdu -f foo.json will display it (quickly). So, how about if we cheat, and convert our CSV of files-in-the-cloud to ncdu-compatible JSON?

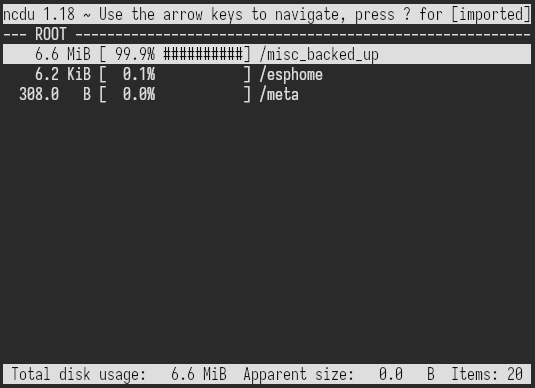

That’s where ncdu-import comes in. Bring it a CSV file which has a “path” column and a “size” column (tell it what the columns are), and it’ll spit out a JSON file loadable by ncdu for quick and convenient analysis. You can look at the testdata dir to get a few examples of what it’s doing.
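To make the idea concrete, here is a rough Python sketch of the kind of conversion described above. It is not ncdu-import's actual code. It assumes the CSV has a header row with columns named "path" and "size", that paths are "/"-separated, and that ncdu accepts the export layout `[1, 0, {metadata}, [dir-info, entries...]]`, where a directory is a list whose first element describes the directory itself. The function name `csv_to_ncdu` and the "progname" value are made up for illustration.

```python
import csv
import io
import json
import time


def csv_to_ncdu(csv_file, path_col="path", size_col="size", root_name="/"):
    """Turn CSV rows of (path, size) into an ncdu-export-style structure.

    Hypothetical helper: the column names and root name are assumptions.
    """

    def new_dir():
        # Files and subdirectories are kept apart, because object stores
        # allow "a" and "a/b" to exist as objects at the same time.
        return {"dirs": {}, "files": {}}

    root = new_dir()
    for row in csv.DictReader(csv_file):
        parts = [p for p in row[path_col].split("/") if p]
        if not parts:
            continue  # skip empty paths
        node = root
        for part in parts[:-1]:
            node = node["dirs"].setdefault(part, new_dir())
        node["files"][parts[-1]] = int(row[size_col])

    def render(name, node):
        # A directory is a list: its own info object first, then its entries.
        entries = [{"name": name}]
        for fname, size in sorted(node["files"].items()):
            # Apparent size and disk usage are both set to the listed size.
            entries.append({"name": fname, "asize": size, "dsize": size})
        for dname, sub in sorted(node["dirs"].items()):
            entries.append(render(dname, sub))
        return entries

    meta = {
        "progname": "csv-to-ncdu-sketch",  # made-up name
        "progver": "0",
        "timestamp": int(time.time()),
    }
    return [1, 0, meta, render(root_name, root)]


if __name__ == "__main__":
    sample = io.StringIO("path,size\na/b.txt,10\na/c/d.txt,5\ne.txt,7\n")
    print(json.dumps(csv_to_ncdu(sample)))
```

Writing the result to a file with `json.dump` and opening it with ncdu -f should then give the usual browsable view, assuming the layout above matches what your ncdu version expects.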

ncdu showing the output of ncdu-import on the sample CSV above